Hello! I am a final-year Ph.D. student in the Institute for Robotics and Intelligent Machines, Georgia Institute of Technology, advised by Prof. Patricio A. Vela.

In the summers of both 2024 and 2025, I was a Research Intern at Microsoft Mixed Reality, collaborating with Dr. Ben Lundell and Dr. Harpreet Sawhney. In summer 2023, I was an Applied Scientist Intern at Amazon Robotics, working with Dr. Sisir Karumanchi and Dr. Shuai Han.

My research interests are in computer vision, language processing, and their integration to advance robotic intelligence. Specifically, my work spans Robotic Grasping (both 6-DoF and planar), Language Command Understanding, and Open World challenges. More recently, I have been developing algorithms that leverage Large Language Models and Vision-Language Models to enable generalizable planning and spatial understanding.

You can find my resume here (updated May 2025).

Update: I am actively seeking full-time positions in industry! I am happy to connect regarding potential opportunities!

🔥 News

- 2025.11: 📝SG2 has been accepted to AAAI 2026!

- 2025.05: 💻Excited to come back to the Microsoft Mixed Reality team as a research scientist intern.

- 2025.02: 📝GASP has been accepted to CVPR 2025!

- 2024.05: 💻Excited to join the Microsoft Mixed Reality team as a research scientist intern.

- 2023.07: 📝WDiscOOD has been accepted to ICCV 2023!

- 2023.06: 📝KGNv2 has been accepted to IROS 2023!

- 2023.05: 💻Excited to join the Amazon Robotics stow perception team as an applied scientist intern.

- 2023.01: 📝LEAP has been accepted to ICLR 2023!

- 2023.01: 📝KGN has been accepted to ICRA 2023!

- 2021.01: 📝CGNet and DLSSNet have been accepted to ICRA 2021!

📖 Education

- 2021.01 - 2025 (Expected): Ph.D. in Electrical and Computer Engineering, Georgia Tech. Advised by Dr. Patricio A. Vela. Atlanta, GA, United States.

- 2019.08 - 2020.12: M.S. in Electrical and Computer Engineering, Georgia Tech. Atlanta, GA, United States.

- 2015.09 - 2019.06: B.E. in Aerospace Engineering, Beihang University. Beijing, China.

💻 Industrial Experience

- 2025.05 - 2025.08: Research Scientist Internship, Microsoft Mixed Reality.

- Mentor: Ben Lundell;

- Topic: Inspecting the vision-action alignment in Vision-Language-Action (VLA) models.

- Redmond, WA, United States.

- 2024.05 - 2024.08: Research Scientist Internship, Microsoft Mixed Reality.

- Mentor: Ben Lundell; Co-Mentor: Harpreet Sawhney

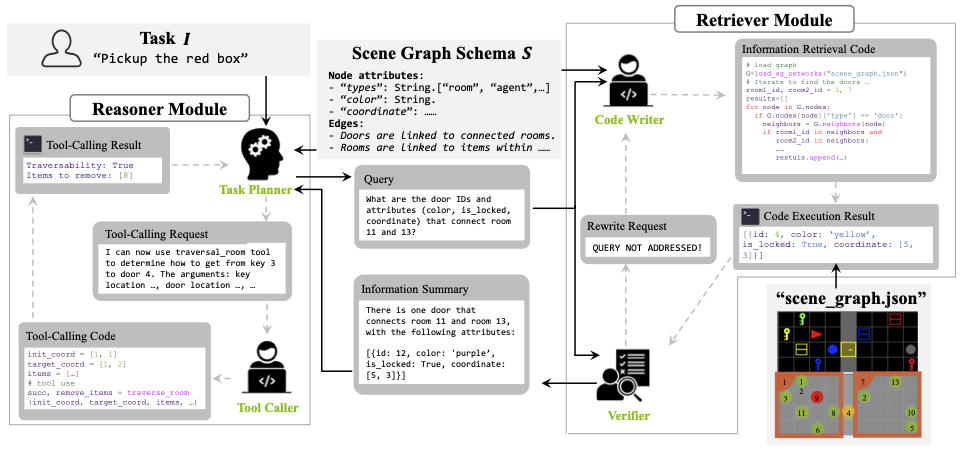

- Topic: Reasoning on scene graphs with Large Language Models (LLMs).

- Redmond, WA, United States.

- 2023.05 - 2023.08: Applied Scientist Internship, Amazon Robotics.

- Manager: Sisir Karumanchi; Mentor: Shuai Han

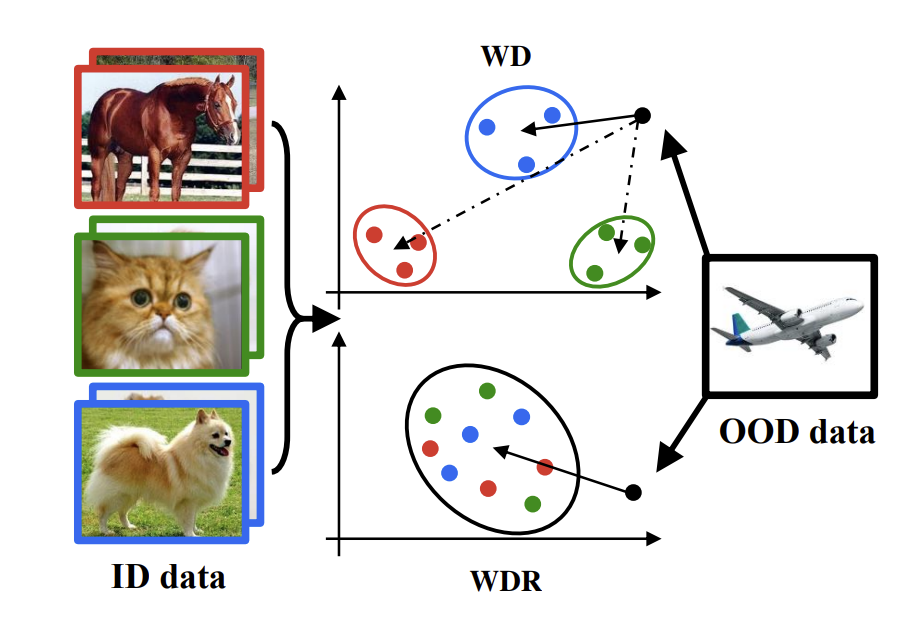

- Topic: Uncertainty estimation for deep vision models to quantify the reliability of robotic actions.

- Seattle, WA, United States.

📝 Publications

(* denotes equal contribution)

SG2: Schema-Guided Scene-Graph Reasoning based on Multi-Agent Large Language Model System

Yiye Chen, Harpreet Sawhney, Nicholas Gydé, Yanan Jian, Jack Saunders, Patricio A. Vela, Benjamin Lundell

Code (Coming Soon) | Project (Coming Soon)

- A schema-guided multi-agent LLM framework for iterative reasoning and planning on scene graphs.

WDiscOOD: Out-of-Distribution Detection via Whitened Linear Discriminant Analysis

Yiye Chen, Yunzhi Lin, Ruinian Xu, Patricio A. Vela

- A visual representation analysis approach for identifying inputs that a deep learning model does not know in the open-world setting.

- Effective across various vision backbones, including ResNet, Vision Transformer, and the CLIP vision encoder.

Planning with Language Models through Iterative Energy Minimization

Hongyi Chen*, Yilun Du*, Yiye Chen*, Patricio A. Vela, Joshua B. Tenenbaum

- An energy-based learning and iterative sampling method for action sequence planning with Transformer models.

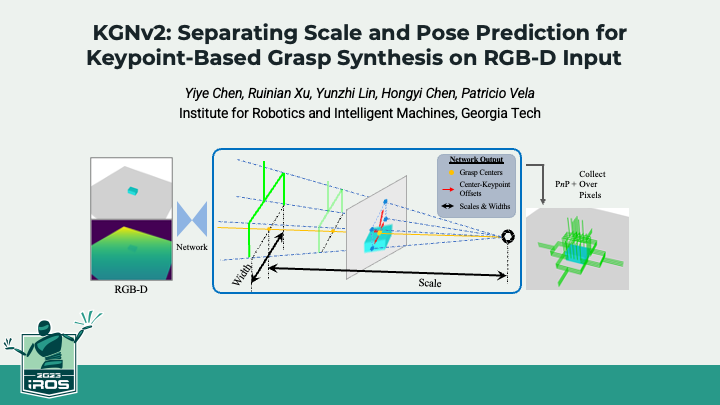

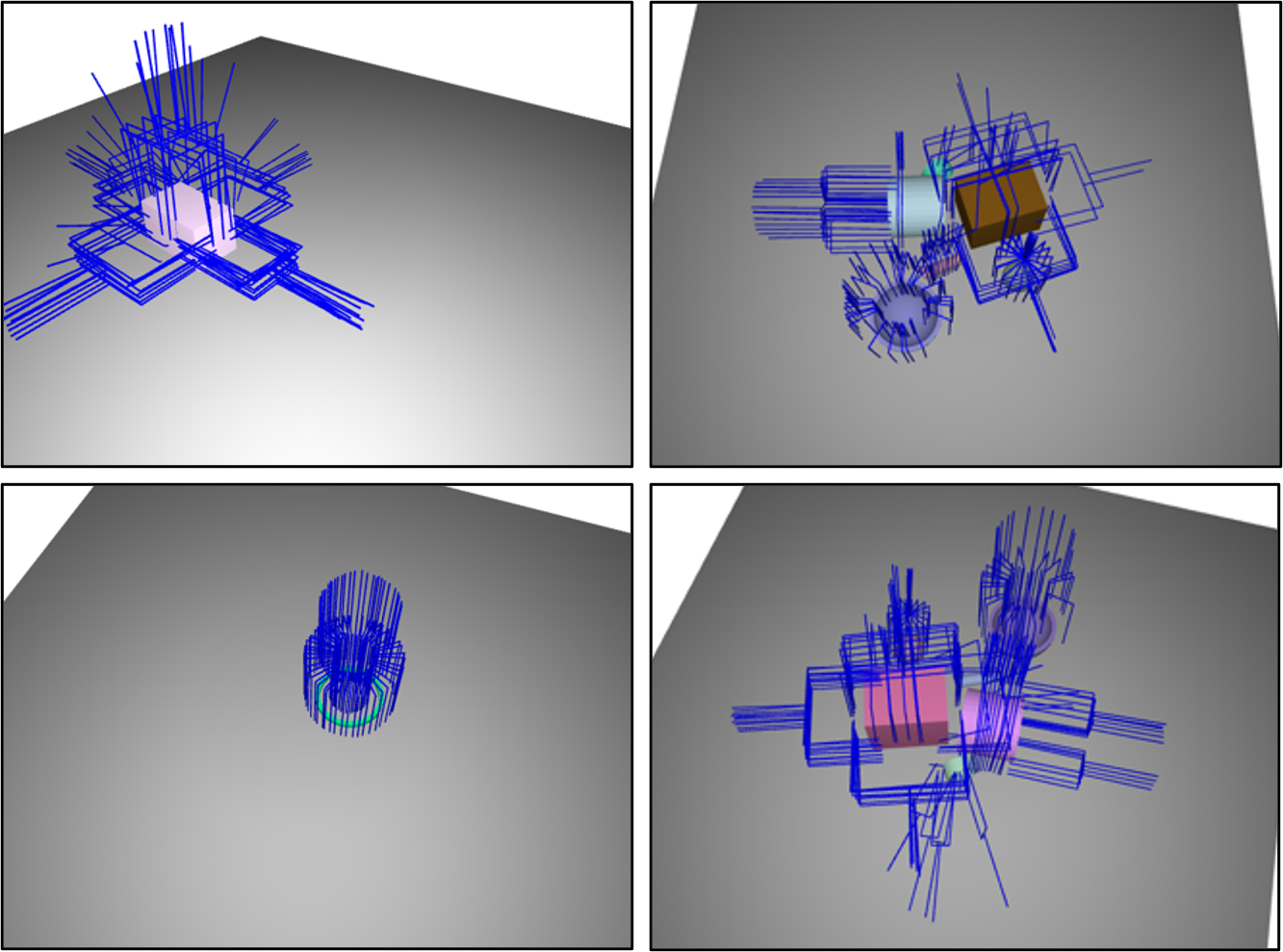

KGNv2: Separating Scale and Pose Prediction for Keypoint-based 6-DoF Grasp Synthesis on RGB-D input

Yiye Chen, Ruinian Xu, Yunzhi Lin, Hongyi Chen, Patricio A. Vela

Code | Presentation | Poster | Supplementary

- Enhances Keypoint-GraspNet (see below) by addressing scale-related issues, where scale refers to the distance of a grasp pose from the single-view camera.

Keypoint-GraspNet: Keypoint-based 6-DoF Grasp Generation from the Monocular RGB-D input

Yiye Chen, Yunzhi Lin, Ruinian Xu, Patricio A. Vela

Code | Presentation | Poster | Supplementary

- A keypoint-based approach for generating 6-DoF grasp poses from single-view RGB-D input.

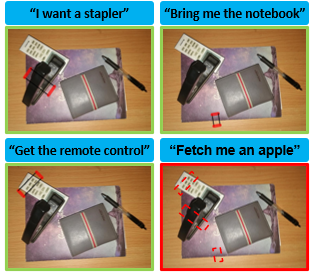

A Joint Network for Grasp Detection Conditioned on Natural Language Commands

Yiye Chen, Ruinian Xu, Yunzhi Lin, Patricio A. Vela

- A language-conditioned robotic grasping method that fuses visual and language embeddings.

- GASP: Gaussian Avatars with Synthetic Priors, Jack Saunders, Charlie Hewitt, Yanan Jian, Marek Kowalski, Tadas Baltrusaitis, Yiye Chen, Darren Cosker, Virginia Estellers, Nicholas Gydé, Vinay Namboodiri, Benjamin Lundell, CVPR 2025.

- Simultaneous Multi-Level Descriptor Learning and Semantic Segmentation for Domain-Specific Relocalization, Xiaolong Wu*, Yiye Chen*, Cédric Pradalier, Patricio A. Vela, ICRA 2021.

📜 Academic Services

- Conference Reviewer: IROS’23-24, ICRA’24, CVPR’24-25, ICLR’25

- Journal Reviewer: The International Journal of Robotics Research (IJRR), IEEE Robotics and Automation Letters (RA-L), IEEE Transactions on Industrial Electronics (TIE)